Automating Local Processes with Ollama

Since the release of GPT-3, large language models (LLMs) have become integral to daily life. When OpenAI introduced a user-friendly chat interface, many jumped to predict the end of human involvement in various professions: software development, writing, editing, translation, art, even CEO, CFO CTO roles. While some still cling to these predictions, it's unlikely to happen anytime soon. However, LLMs are undeniably valuable tools.

Can LLMs Help with Software Development?

The simplest answer is to use something like GitHub CoPilot, which writes code for you. I've tried it and wasn't blown away. Sure, it can produce code better than a lot of low-quality attempts I've seen in over 20 years in the industry, but it's far from the best. Its output is mediocre, but does it really need to be perfect? Is it even solving the right problem? Is writing the code even the main bottleneck in software development? Not really. Most of a developer's time is spent reading code and navigating organizational hurdles, comprehending system design, looking for a defect in a system that does not produce any logs but still runs on production, not typing code. And yes, maybe some of those problems will be addressed with more advanced frameworks like AutoGen

While CoPilot can bridge gaps in a developer’s API knowledge, it struggles to keep up with the latest changes in packages. Microsoft is working hard to address this, but it remains a weak point. In my experience, CoPilot's autocomplete is inconsistent, and I often found myself spending more time fixing its output than thinking critically about the problem I was trying to solve. All this, coupled with the high price tag, left me unimpressed. Microsoft likely doesn’t even break even with what they charge, so expect prices to rise as the service matures.

Privacy Concerns

Another issue with specialized tools like CoPilot is privacy. Sure, there's a EULA, but in most cases, sensitive data needs explicit authorization to leave a protected environment for anything beyond hobby projects. CoPilot isn’t ideal in this regard. IntelliJ's recent attempts at local models are noble. same applies to everything that was done for VIM and Emacs (ok, ok... for VS Code too).

Flexibility and Customization A final frustration is how these models are locked into specific use cases. If your needs deviate from the product manager’s roadmap, you're stuck. Wouldn't it be nice if you could have a model that works in any custom scenario, without navigating API limitations?

This brings us to Ollama.

Why Ollama?

What if we had a tool that accepted any text input via stdin and produced stdout like traditional UNIX utilities—grep, awk, sed—but with some intelligence applied? That's where Ollama comes in. It's been around for a while, and many developers already use it.

Getting Started with Ollama

To install it on Linux:

curl -fsSL https://ollama.com/install.sh | sh

On Windows and macOS, head to Ollama's homepage, download the installer, and follow the standard process. (At the time of writing, there’s no Chocolatey package, and I haven’t checked Homebrew.)

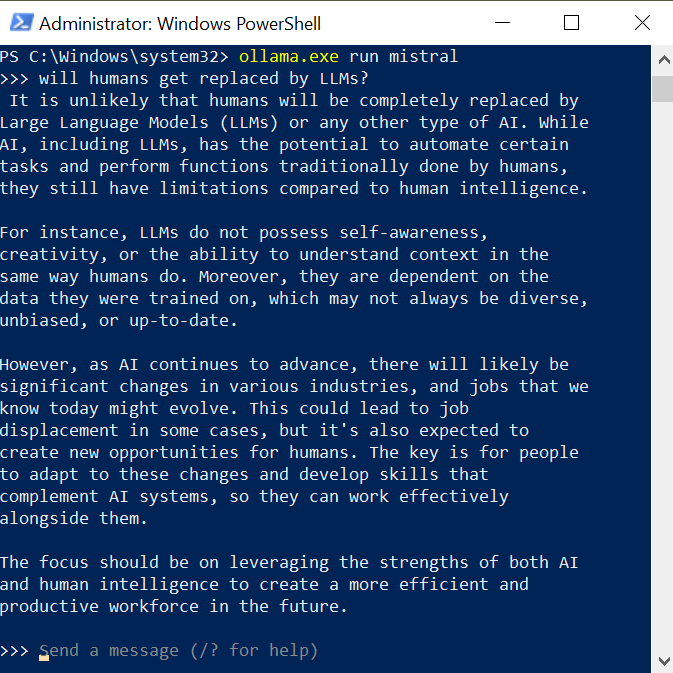

Once installed, you can run:

ollama run mistral

This downloads around 4GB of pre-trained model weights and starts a session with the Mistral model, which I’ve found to be solid for software development tasks.

But this is old style fun, just without web interface, how about that serious bash scripting?

Let's start with something simple like

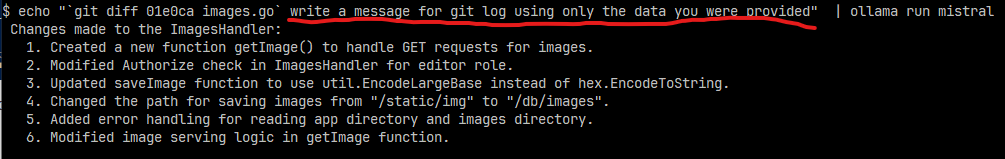

echo "`git diff 01e0ca images.go` write a message for git log using only the data you were provided" | ollama run mistral

I take git diff for a file and supplement it with a direction to the model, and the result is not bad. Much better than a log of messages in git log I saw. So no more generic change messages?

But can it be even better? Let's set a mark to the quality of the comment:

DIFF=`git diff goGet.go`

GITLOG=`echo "$DIFF write a message for git log using only the data you were provided" | ollama.exe run mistral`

SCORE=`echo "Score 1-10 if this is an informative git log message. Use only this data and return only one number. Don't explain. $GITLOG" | ollama.exe run phi3:medium`

echo "$SCORE"

And I got result 7 on a first run, 8 on the second. I can keep trying until its perfect or settle for anything less than that. And all of that can be done hands-off.

So sky is the limit.

Wait, but what if my machine (a laptop) is too weak to run a decent model like phi3:medium, or even the small ones take forever to generate? Am I out of luck and have to depend on greedy API providers?

First of all, you can use something like an external GPU if your machine supports either

- Thunderbolt 3+

- Oculink

- Has one unused nvme ssd m.2 port

get a GPU with as much VRAM as you can afford and enjoy.

What if my machine does not support any of that?

You can always run ollama server on a different machine. It is controlled with an environment variable

OLLAMA_HOST=hostname:port

You can make ollama available within your protected network by setting simple magic in your nginx (DON'T use that on production)

location / {

proxy_pass http://localhost:11434;

proxy_set_header Host 127.0.0.1;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

And for a server you can get anything starting from a used laptop or a desktop that you can get for cheap, or you can rent hardware from cloud providers (which is more convenient, but hugely more expensive)

And yes. Ollama exposes REST api that you can use. But that is simply stated on their own documentation page.

curl http://localhost:11434/api/generate -d '{

"model": "llama3.1",

"prompt":"Why is the sky blue?"

}'